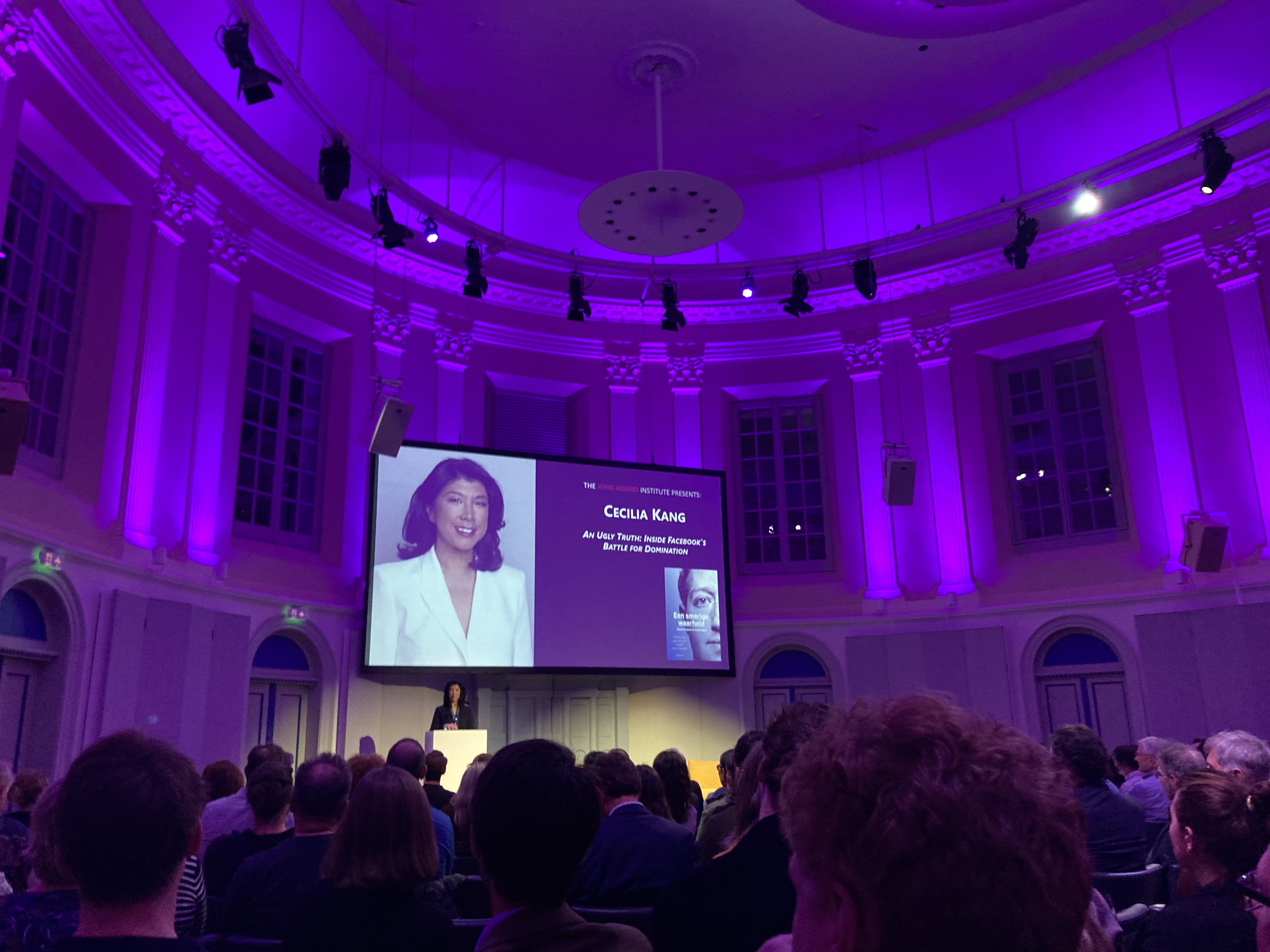

Last week, I attended a conversation with Cecilia Kang, the New York Times journalist who co-authored An Ugly Truth with Sheera Frenkel. Much of it was about patterns in Facebook’s decisions, leadership style and press relations.

I was delighted to be back in a conference hall, and it happened to be the one where we hosted Fronteers 2009 and Mobilism 2011, the memories! These events had sponsors like Opera, Internet Explorer 8 and Blackberry. Anyway, I digress, let’s talk about Facebook.

Profit over people

Facebook wants two things, Kang emphasised: connect all people together through technology and grow their business. Sounds reasonable? Maybe, but these goals impact one another in a very specific way. Facebook sells ads. You can get most people to look at ads if the content triggers people, including when it is upsetting, hateful content. Growing business ends up growing hate. The site does bring people together, yes, but it profits most when they are upset together. And, problematically, maximising profit is the end goal, An Ugly Truth shows.

Is bad content a problem? Kind of, but most platforms have some of that and we should probably blame the authors of that content, not Facebook. Is profit a problem? Or maximising it? It isn’t that per se. All companies try to make profit, most try to make a lot. The problem is profit at the expense of people’s wellbeing and safety. This is where the tobacco and oil company comparisons come in. Facebook understands which content angers or harms people. They ran experiments with happier and less depressing newsfeeds. They knew about persecution of Rohingya people in Myanmar, fired up by a politician they gave a voice. They knew about extremists using their platform to organise storming the Capitol. They knew about the medical misinformation that undermined solutions for the global COVID-19 pandemic.

The thing is, extremism, misinformation and hate thrive on amplification, on being widely shared. Facebook’s inaction is so problematic because it facilitates and accelerates that amplification to make more money. There would be extremists, liars and haters in a world without Facebook, but they would thrive a lot less. They would thrive less on regular tv, and they would thrive less on a social media platform with more ethical content priorities. There are other platforms too that are based on amplification, which often have similar problems, but this post is not about them.

The Facebook Papers, a large set of documents released by whistleblower Frances Haugen to a dozen news outlets, clearly confirm the conclusions of An Ugly Truth: Facebook knows what it amplifies and doesn’t care enough to stop. Of course, they contract huge numbers of content moderators and mark COVID-19 information from trusted sources, but leadership decisions consistently put engagement (profit) before safety (moderating harmful content).

The Atlantic’s Adrienne LaFrance:

Again and again, the Facebook Papers show staffers sounding alarms about the dangers posed by the platform—how Facebook amplifies extremism and misinformation, how it incites violence, how it encourages radicalization and political polarization. Again and again, staffers reckon with the ways in which Facebook’s decisions stoke these harms, and they plead with leadership to do more.

And again and again, staffers say, Facebook’s leaders ignore them.

(From: ‘History Will Not Judge Us Kindly’ in The Atlantic)

The Wall Street Journal’s Facebook Files series revolves around the same conclusion in its opening words:

Facebook Inc. knows, in acute detail, that its platforms are riddled with flaws that cause harm, often in ways only the company fully understands. (…) Time and again, the documents show, Facebook’s researchers have identified the platform’s ill effects. Time and again, despite congressional hearings, its own pledges and numerous media exposés, the company didn’t fix them.

(From: ‘The Facebook Files’)

Inaction is a pattern An Ugly Truth confirms. Time after time, when Facebook leadership can choose between what’s good for people and what makes them more profit, they choose profit. These aren’t always at odds, but when they are, profit is consistently prioritised. And with that, the amplification of bad content.

Besides amplification of harmful content for profit, Facebook also engages in large-scale data collection, as Shoshana Zuboff explained in The Age of Surveillance Capitalism. Facebook tracks even users who deactivated their accounts, and it had shady data-sharing deals with device manufacturers.

Journalists have reported on lots and lots of serious problems related to Facebook’s priorities, some of which I linked above. This brings us to another pattern: Facebook’s press teams downplay the problems or outright deny them. They’ll call a story an absurd suggestion, only for it to turn out to be mostly accurate. They will also often point to the competition, which sometimes has similar problems. Or they attack the messenger. When Frances Haugen was testifying, Andy Stone, a Facebook PR person, shamelessly tweeted:

Just pointing out the fact that @FrancesHaugen did not work on child safety or Instagram or research these issues and has no direct knowledge of the topic from her work at Facebook.

Which is why she brought documents, as Jane Chung aptly replied. This time the press team responded childishly; the book also shows that they like to spin stories and deny truths when they see a chance.

The leadership

The book also goes into the people running Facebook and how they see the world. When I think of Mark Zuckerberg’s world view, I think about that time he called people who trusted him with their data “dumb fucks”. I hesitate to reduce a person’s world view to one quote from their past, but reading An Ugly Truth made me feel that his decisions and priorities of today still show he tends to dismiss other people’s safety.

When he addressed Georgetown University, he said he doesn’t want to ‘censor political speech’ and focused on free speech. The ideology seems to be that a president’s freedom to incite violence or spread covid misinformation, and the public’s freedom to ‘judge for themselves’, are more important than people’s safety. Everyone wants freedoms, but the question is whose freedom gets priority.

Mark Zuckerberg, Kang explained in Amsterdam, is inspired by Silicon Valley men like the controversial libertarian Peter Thiel, and not so much by what his own employees tell him. They often don’t bring important things to him, Kang said, as the company has a culture of protecting the leader. People want to be friends with him, not appear too critical. Maybe that’s the case for many CEOs, but it does make one wonder what the company would have looked like under different leadership that took inspiration from different places.

So, what now?

I think everyone gets how Facebook, Instagram, WhatsApp, React and Oculus have their merits. They can and often do bring people together. Make the world a better place. I don’t mean this sarcastically. Ok, I’m not personally so convinced of Oculus, I’m more of a real world person, but I’ll leave that for a later post. These platforms aren’t inherently bad, they bring lots of good to many people. The bad they bring sometimes merely reflects the bad that’s out there in the world. But it’s clear that Facebook’s role is often that of a megaphone, not just a mirror.

Some of the audience questions were along the lines of “could we remove the bad parts of Facebook?” This is a tricky question, because of where most of the problems manifest. They aren’t in one team that messes things up, they are right in the business model that pays everyone’s salary. Removing the bad parts might not leave us with much. There are also countless stories of people trying to “change the company from within”, which has now become a bit of a meme.

More legislative controls are expected, likely in the US and Europe and likely somewhere in the next 5 years or so. Maybe this will get us more of the good parts and less of the bad parts of Facebook.

Until then, this is my personal list of things we can all do now:

- refuse to work at Facebook; it’s like voting, your individual vote doesn’t matter, but millions of individual votes can push the world in a clear direction, in this case one where Facebook has a hard time finding employees

- quit working at Facebook (I know, controversial), for the same reasons

- stop or reduce usage of Facebook/Meta and its products (including, dare I say it, React)

- help non-tech friends and family move away from Facebook

- stop advertising on Facebook (some companies have)

- help small business friends by building them a simple website outside Facebook’s walled garden

- read up on patterns in tech companies, check out books like An Ugly Truth and Super Pumped (on Uber)

Wrapping up

In conclusion, the issue isn’t that Facebook has harmful content or makes profit, it’s that its leadership consistently decides to prioritise their profit over preventing harm. They know they have problems, but decide against fixing them and aren’t honest about this to the press. This is why we all need a lot less Facebook in our lives, and I urge you to join the movement.

Looking back, the time of Opera, Internet Explorer 8 and Blackberry felt more innocent. The monopolies felt less harmful. I, for one, am very curious how the world will look back at today’s Facebook in ten years’ time. Let’s hope their patterns will have changed for good.

Comments, likes & shares (14)

the genocides, the medical misinformation during the pandemic, the elections of multiple fascists, they knew this was happening and still chose their profit over our societies, looking away from the harms.

@hdv This sentence really hits on the core issue of capitalist media, true on social media but also more broadcast media too I think:

"The site does bring people together, yes, but it profits most when they are upset together."

The "together" parts are more relevant to social media of course, but rage engagement is a real problem more broadly.