I recommend everyone to browse with content blockers turned on. The goal: protection against tracking. Safari and Firefox have good tracking protection features. With more people using these features, we should test our sites with content blockers turned on.

Content blocking

In the age of surveillance capitalism, some browsers have started getting more aggressive in how they block ad trackers to protect the privacy of their users.

Firefox has had Tracking Protection since version 42. It works with a blacklist of domains. When something is blocked, it will show up in the Dev Tools Console (“The resource at X was blocked, because tracking protection is enabled”).

Safari not only can block trackers for you, it also allows third parties to define blocking behaviour. Third party blockers can match patterns in URLs and detect things like the domain a script is loaded on (same as site vs not same as site, the latter means third party). When a tracker is identified, developers can choose what to do: block it altogether, just block cookies or hide elements from the page. Users can install one or multiple content trackers. There is a “load without content blockers” feature (long press reload on iOS), but note users may be unaware of it. I know I was until very recently.

What can break

Four examples I encountered in the last few months:

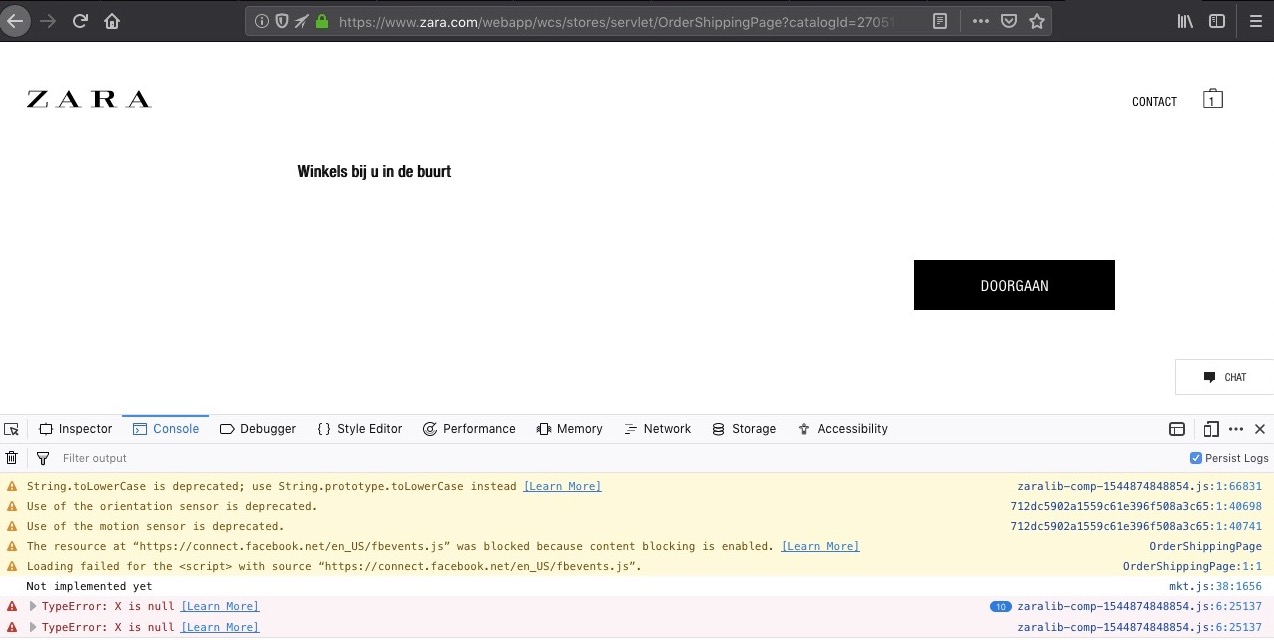

- I wanted to purchase clothing on Zara’s website, to pick up in a store. On the part of the form where I had to select which store, nothing showed. I could also not go to the next step.

TypeError: X is nullwas thrown in the browser Console. A Facebook tracking script was blocked by my content blocker, presumably the reason for the script to fail. - I wanted to find opening times at my local supermarket. Search did not yield results until I turned off content blockers.

- I wanted to open a hamburger menu. It did not open. (This one is very common, actually)

- A link did not work at all.

On this page, I could not select a store or press continue, because Facebook’s tracking script did not load

On this page, I could not select a store or press continue, because Facebook’s tracking script did not load

To understand the last example better, see Stephanie Hobson’s Google Analytics, Privacy, and Event Tracking, which shows how Google Analytics recommended tracking code breaks links for people who block the tracker.

We should not need to track to take people’s money. Or to let them find opening times, open a hamburger menu or follow a link. Metrics can be useful, but they are rarely questioned.

A working website: some strategies

I understand companies want behavioral data in order to improve their sites. But if it puts a stop to taking people’s actual money, doesn’t that defeat the purpose of why you have a website as a company? Is figuring out how many people open your hamburger menu more important than having the feature work at all?

Use no scripts

This one is easy in theory. It is hard in the reality of modern web development, because people will laugh at the suggestion. If your site does not rely on JavaScript to build features like to take people’s money, search for opening times, show a navigation on mobile or go to other pages, these features can potentially just work.

Progressive enhancement

If you manage to develop in a way that does not assume JavaScript (or CSS), you’re likely doing progressive enhancement of some sort. It means that when you build new features, you start with the very basic building blocks, like headings and lists. Simple HTML that describes the feature in a way the most basic browsers understand. You then turn it into something fancy, using web technology a modern as you like. For example, use Web Components to make it tabs, as Andy Bell described. If the fancy thing breaks, the basic building block likely still works.

Not only should progressive enhancement help make your site more robust and resilient, if your whole team can think this way, it can help setting priorities sensibly. See also Resilient Web Design, Jeremy Keith’s free book on building resilient web sites and Dear developer, a talk by Charlie Owen.

Conclusion

To build good websites, I think we should prioritise working websites above gathering potentially useful data (if doing that at all). As developers, we should keep in mind trackers could get blocked and, consequently, never rely on their availability.

Comments, likes & shares

No webmentions about this post yet! (Or I've broken my implementation)