Large Language Models (LLMs) and tools based on them (like ChatGPT) are all that some in tech talk about today. I struggle with the optimism I see from businesses, the haphazard deployment of these systems and the seemingly ever-expanding boundaries of what we are prepared to call “artificially intelligent”. I mean, they bring interesting capabilities, but arguably they are neither artificial, nor intelligent.

Artificial intelligence, as a field, isn't easily defined. There are many different things that fall under the umbrella. It attracts people with a wide range of interests. And it has all sorts of applications, from physical robots to neural networks and natural language processing. Today, there is a lot of hype around Language Models (LMs), a specific technique in the field of “artificial intelligence”, which Emily Bender and colleagues define as ‘systems trained on string prediction tasks’ in their paper ‘On the dangers of stochastic parrots: can language models be too big?’ (one of the co-authors was Timnit Gebru, who had to leave her AI ethics position at Google over it). Hype isn't new in tech, and many recognise the patterns in vague and overly optimistic thought leadership (‘$thing is a bit like when the printing press was invented’, ‘if you don't pivot your business to $thing ASAP, you'll miss out’). Beyond the hype, it's essential to calm down and understand two things: do LLMs actually constitute AI, and what sort of downsides could they pose to people?

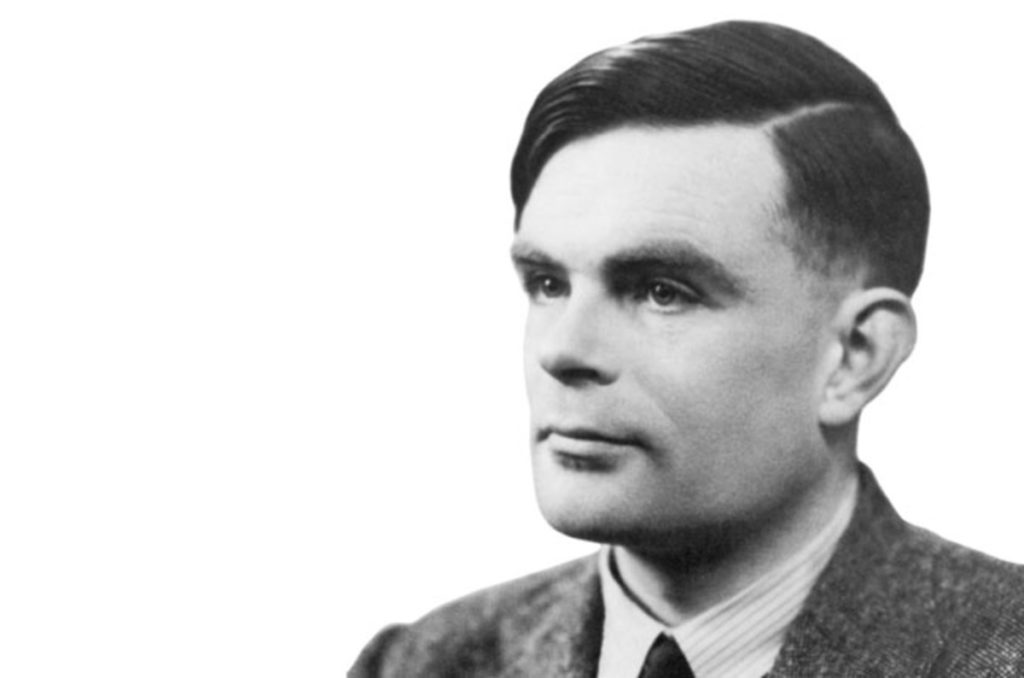

Artificial intelligence, in one of its earliest definitions, is the study of machines whose use of language is indistinguishable from that of humans. In 1950, Alan Turing famously proposed an imitation game as a test for this indistinguishability. More generally, AIs are systems that think or act like humans, or that think or act rationally. According to many, including OpenAI, the company behind ChatGPT and Whisper, large language models are AI. But OpenAI is a company, a non-profit with a for-profit subsidiary, so of course they would say that.

Not artificial

In one sense, “artificial” in “AI” means non-human. And yes, of course, LLMs are non-human. But they aren't artificial in the sense that their knowledge has clear, non-artificial origins: the input data that they are trained with.

OpenAI has not openly disclosed where they get their data since GPT-3 (how Orwellian). But it is clear that they gather data from all over the public web, from places like Reddit and Wikipedia. Earlier, they used filtered data from Common Crawl.

First, there are the long-term consequences for quality. If this tooling results in more large-scale flooding of the web with AI-generated content, and the models continue to be trained on the contents of that same web, it could result in a “misinformation shitshow”. The web also seems like a source that can dry up once people stop asking questions there and interact directly with ChatGPT instead.

Second, it seems questionable to build off the fruits of other people's work. I don't mean off your employees, that's just capitalism. I mean other people whose work you scrape as input data without their permission. It was controversial when search engines took the work from journalists; this is that on steroids.

Not intelligent

What about intelligence? Does it make sense to call LLMs and the tools based on them intelligent?

Alan Turing

Alan Turing suggested (again, in 1950) that machines can be said to think if they manage to trick humans such that ‘an average interrogator will not have more than 70 per cent chance of making the right identification after five minutes of questioning’. So maybe he would have regarded ChatGPT as intelligent? I guess someone familiar with ChatGPT's weaknesses could easily ask the right questions and identify it as non-human within minutes. But maybe it's good enough already to fool average interrogators? And a web flooded with LLM-generated content would probably fool (and annoy) us all.

Still, I don't think we can call bots that use LLMs intelligent, because they lack intentions, values and a sense of the world. The sentences systems like ChatGPT generate today merely do a very good job at pretending.

The Stochastic Parrots paper (SP) explains why pretending works:

our perception of natural language text, regardless of how it was generated, is mediated by our own linguistic competence

(SP, 616)

We merely interpret LLMs as coherent, meaningful and intentional, but it's really an illusion:

an LM is a system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot.

(SP, 617)

They can make it seem like you are in a discussion with an intelligent person, but let's be real, you aren't. The system's replies to your chat prompt aren't the result of understanding or learning (even if the technical term for the process of training these models is ‘deep learning’).
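To make “probabilistic information about how they combine” a bit more concrete, here is a toy sketch in Python. It is nowhere near a real LLM: it assumes a simple word-pair (bigram) table instead of a neural network, and the function names (`train_bigrams`, `generate`) and the tiny corpus are made up for illustration. What it does share with the description in the paper is that it only stitches together word sequences it has already observed, without any reference to meaning.

```python
import random
from collections import defaultdict


def train_bigrams(text):
    """Record, for each word, every word observed directly after it."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, length, seed=0):
    """Build a sentence by repeatedly sampling an observed next word."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    word = start
    output = [word]
    for _ in range(length - 1):
        options = followers.get(word)
        if not options:  # this word was never followed by anything
            break
        # Words that followed more often appear more often in the list,
        # so they are proportionally more likely to be picked.
        word = rng.choice(options)
        output.append(word)
    return " ".join(output)


# Hypothetical miniature "training data"
corpus = "the parrot repeats the phrase and the parrot repeats the sound"
model = train_bigrams(corpus)
print(generate(model, "the", 8))
```

Whatever this prints can look sentence-like, but every adjacent pair of words was simply copied from the corpus. Any meaning is supplied by the reader.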

But GPT-4 can pass standardised exams! Isn't that intelligent? Arvind Narayanan and Sayash Kapoor explain that the challenge of standardised exams happens to be one that large language models are good at by nature:

professional exams, especially the bar exam, notoriously overemphasize subject-matter knowledge and underemphasize real-world skills, which are far harder to measure in a standardized, computer-administered way

Still great innovation?

I am not too sure. I don't want to spoil any party or take away useful tools from people, but I am pretty worried about large-scale commercial adoption of LLMs for content creation. It's not just that people can now more easily flood the web with content they don't care about just to increase their own search engine positions. Or that the biases in the real world can now propagate and replicate faster with less scrutiny (see SP 613, which shows how this works and suggests more investment in curating and documenting training data). Or that criminals use LLMs to commit crimes. Or that people may use them for medical advice and get advice that is incorrect. In Taxonomy of Risks Posed by Language Models, 21 risks are identified. It's lots of things like that, where the balance is off between what's useful, meaningful, sensible and ethical for all on the one hand, and what can generate money for the few on the other. Yes, both sides of that balance can exist at the same time, but money often impacts decisions.

And that, lastly, can lead to increased inequity. Monetarily, e.g. what if your doctor's clinic offers consults with an AI by default, but you can press 1 to pay €50 to speak to a human (as Felienne Hermans warned Volkskrant readers last week)? And also in terms of the effect of computing on climate change: most large language models benefit those who have the most, while their effect (on climate change) threatens marginalised communities (see SP 612).

Wrapping up

I am generally very excited about applications of AI at large, like in cancer diagnosis, machine translation, maps and certain areas of accessibility. And even about the capabilities that LLMs and tools based on them bring. This is a field that can genuinely make the world better in many ways. But it's super important to look beyond the hype and into the pitfalls. As my feeds naturally contain a lot of optimistic technologists, I have consciously added many more critical journalists, scientists and thinkers to them. If this piques your interest, one place to start could be the Distributed AI Research Institute (DAIR) on Mastodon. I also recommend the Stochastic Parrots paper (and/or the NYMag feature on Emily Bender's work). If you have any recommended reading or watching, please do toot or email.

Comments, likes & shares (65)

@hdv great post 👍

@darth_mall thanks!

@hdv Thanks for writing this, and for all the links/notes.

@hdv best post on this topic in a long time, thanks

@joostvanderborg thank you, glad it helps!

@sciloqi you're too kind, thanks!

@hdv

> We merely interpret LLMs as coherent, meaningful and intentional, but it's really an illusion

I think this can be applied to humans as well.

It's easy to see kids are mimicking inputs from others in their early years. "Aha she does X or says Y because someone did X or showed Y last week".

Now either there's a point in early life where the spark of consciousness/intelligence ignites OR it's just an illusion all the way down.

@hdv Meaning, as kids grow older, it becomes ever harder to spot from who they learned which response.

They can't even spot it themselves because it's a giant spaghetti of information from which the brain derives its responses.

The illusion of intelligence at that point is complete for both the outside observer and the individual.

So from this perspective, what is intelligence? And how different are we _really_ from these models?

Haha I swear I was first 😂 https://www.theguardian.com/commentisfree/2023/mar/30/artificial-intelligence-chatgpt-human-mind

The problem with artificial intelligence? It’s neither artificial nor intelligent

@rikschennink it's a really good point. Can see it in music too: there is lots of music today that is not created by LLMs, but is still a boring copy of what already exists.

There are theories that see the mind as a machine (it's 'just' neurons), I get it may eventually come down to that, but I feel it's overly optimistic that we'd be able to replicate that (or that LLMs are anything like that)

@hdv Your article is better. Just saying.