This week I attended Accessibility London. It took place at the headquarters of Sainsbury’s, who were kind enough to host the event.

The Accessibility Peace Model

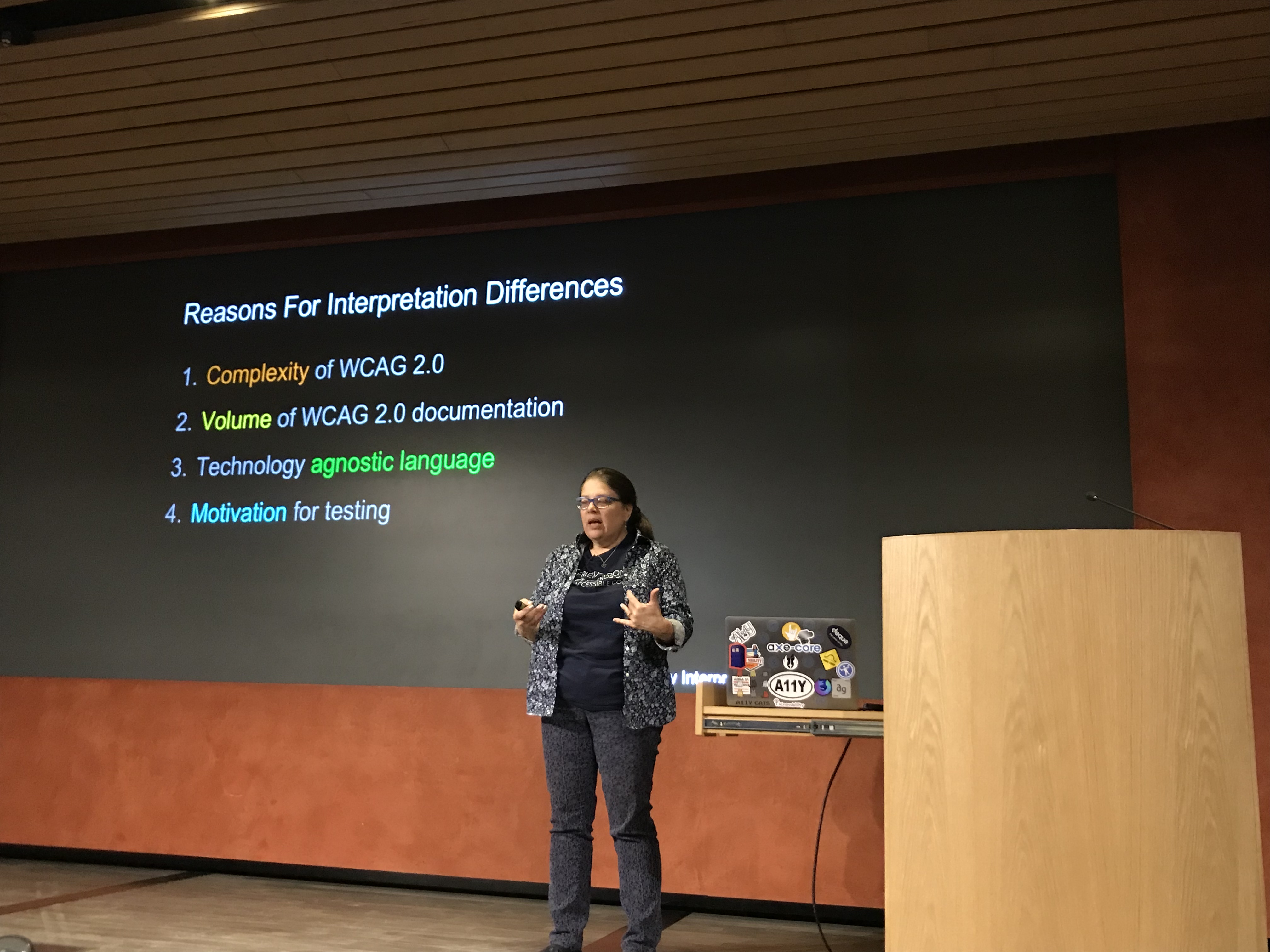

Glenda Sims talked about what she and Wilco Fiers like to call the accessibility interpretation problem: people disagree about how (much) accessibility should be implemented and tested. There are normative guidelines (WCAG), but experts have different views on what complying with the norm means. Glenda explained that this is ok: ‘there are multiple valid ways to use WCAG’.

Glenda Sims on why interpretations differ

She explained a scale of interpretation styles:

- bare minimum (only where WCAG says ‘this is normative’)

- optimised (goes beyond the strictly normative parts, also tries to act in the spirit of the whole document and looks at the Understanding and Techniques pages)

- idealised (tries to look beyond what’s currently possible and maximises accessibility).

Different people on your team or your client’s teams will be at different places on that scale. Knowing this can help when setting expectations. I found this a super refreshing talk; it resonated a lot with my experiences. The full whitepaper can be found on Deque’s website.

The history and future of speech

Léonie Watson walked us through the history and future of people talking to computers (Léonie’s slides), from synthetic voices that existed more than 90 years ago to modern computer voices that can sing reasonable covers of our favourite pop songs. There are various W3C standards related to speech, Léonie explained, like VoiceXML and the Speech Synthesis Markup Language, and there is a CSS Speech spec (‘why should I have to listen to everything in the same voice?’, Léonie asked. A great point.) As far as I know, nobody works on CSS Speech anymore, sadly.

Léonie also explained how we can design for voice with the Inclusive Design Principles in mind, with useful do’s and don’ts. At the end, she left us with questions about embedding privacy, security, identity and trust in voice assistants.

This was an excellent event with great speakers; I was so glad I was able to attend.